Introduction

The main intention of this blog is to showcase the power of Pega’s advanced capabilities, such as Text Analytics (NLP), Decisioning, and Marketing.

This article focuses mainly on Pega’s NLP and Decisioning capabilities to implement the use case below.

Use Case

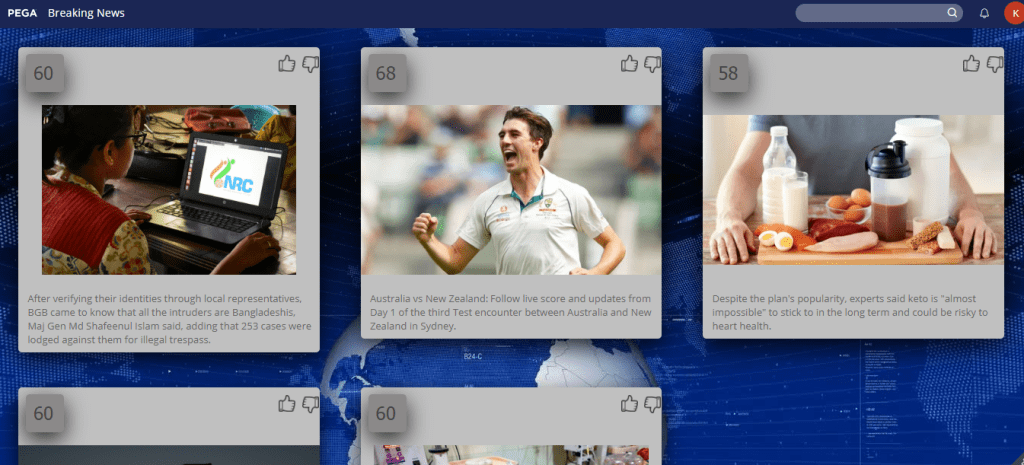

A media organization wants to show its users relevant, high-quality news based on their responses, that is, whether they like and read a given news feed item.

As this is my first blog, I have tried to capture and elaborate on the concepts based on my learning and expertise.

In the snapshot above, there is a 68% chance that the audience will read the sports feed!

If a reader shows interest by clicking the news tile, the system automatically learns from that response and recalculates the propensity (the chance of the news feed being read).
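To make the idea of recalculating the propensity concrete, here is a minimal Python sketch that treats propensity as a smoothed click rate for one news category. This is a simplification under stated assumptions: Pega’s adaptive models derive propensity from their predictors rather than from a single tally, and the class name, prior, and weight below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ResponseCounts:
    """Hypothetical running tally of responses for one news category."""

    positives: int = 0
    negatives: int = 0

    def propensity(self, prior: float = 0.5, weight: float = 2.0) -> float:
        # Smoothed response rate: the prior acts like `weight` imaginary
        # responses, so a category with no data starts at the prior
        # instead of dividing by zero.
        total = self.positives + self.negatives
        return (self.positives + prior * weight) / (total + weight)

    def record(self, clicked: bool) -> None:
        # A click is a positive response; anything else counts as negative.
        if clicked:
            self.positives += 1
        else:
            self.negatives += 1


# Illustrative counts, not real data.
sports = ResponseCounts(positives=6, negatives=3)
print(f"before click: {sports.propensity():.3f}")  # 7/11
sports.record(clicked=True)
print(f"after click:  {sports.propensity():.3f}")  # 8/12
```

The prior keeps a brand-new category from jumping to 0% or 100% after its first response; as responses accumulate, the observed rate dominates.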

Concept

The high-level concept diagram above shows how the system sorts the general news feed to surface the items readers are more likely to enjoy, using text analytics and machine learning.

With Pega’s NLP and Decisioning capabilities, this architecture is quite easy to implement. So, let’s first look at how Pega Text Analytics can categorize the news feed before we pass it to the AI component.

News Feed

Thanks to https://newsapi.org/ for an open API that serves breaking news from around the world. This REST API provides news content as shown below.
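The request and response can be sketched in Python using only the standard library. The endpoint `/v2/top-headlines`, its `country`, `category` and `apiKey` query parameters, and the `articles[].source.name`, `title`, `description` and `content` fields follow NewsAPI’s public documentation; the helper names are hypothetical. In Pega you would normally configure this call as a REST connector rather than write code, so treat this only as an illustration of the data shape.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List, Optional

# Public top-headlines endpoint of newsapi.org; an API key is required.
BASE_URL = "https://newsapi.org/v2/top-headlines"


def build_url(api_key: str, country: str = "us", category: Optional[str] = None) -> str:
    """Build the request URL for top headlines, optionally for one category."""
    params = {"country": country, "apiKey": api_key}
    if category:
        params["category"] = category
    return f"{BASE_URL}?{urllib.parse.urlencode(params)}"


def fetch_headlines(url: str) -> Dict[str, Any]:
    """Perform the HTTP GET and decode the JSON body (needs network access)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def parse_articles(payload: Dict[str, Any]) -> List[Dict[str, str]]:
    """Keep only the fields the text analyzer needs."""
    articles = []
    for item in payload.get("articles", []):
        text_parts = [item.get("description"), item.get("content")]
        articles.append({
            "source": (item.get("source") or {}).get("name") or "",
            "title": item.get("title") or "",
            "text": " ".join(part for part in text_parts if part),
        })
    return articles


# Offline usage with a hand-written payload shaped like a NewsAPI response.
sample = {"status": "ok", "articles": [
    {"source": {"id": None, "name": "Example Sports"},
     "title": "United win the final",
     "description": "A late goal sealed the title.",
     "content": None}]}
print(parse_articles(sample))
```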

Text Analyzer (a Natural Language Processing component)

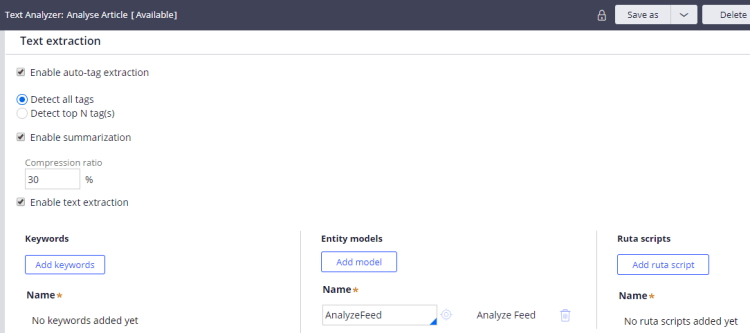

Let’s see how we can extract buzz words from each article using Pega’s Natural Language Processing component. To extract such keywords, create a Text Analyzer and configure it to extract tags, sentiment and, of course, entities (people, organizations, places, etc.) based on an entity model.

As you can see below, we created a Text Analyzer that extracts buzz words, sentiment, and key entities using an entity model trained on a wide variety of sentence patterns.

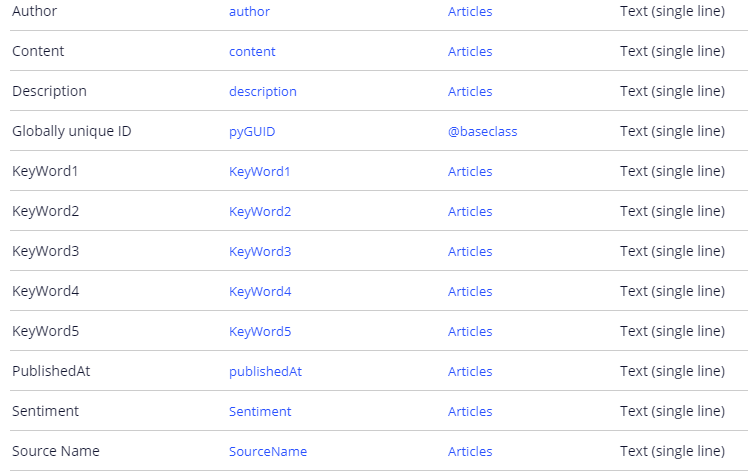
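Pega’s Text Analyzer is configured rather than coded and relies on trained models. Purely to show what this step produces, here is a deliberately naive Python stand-in that picks buzz words by frequency and scores sentiment against small word lists. The word lists and function names are hypothetical, and entity extraction is left out.

```python
import re
from collections import Counter
from typing import Dict, List

# Tiny illustrative word lists; a real text analyzer uses trained models.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on", "for",
             "is", "was", "at", "by", "with", "after", "its", "their"}
POSITIVE_WORDS = {"win", "wins", "won", "victory", "record", "celebrate", "rescue"}
NEGATIVE_WORDS = {"loss", "lost", "injury", "injured", "crash", "crisis", "decline"}


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z']+", text.lower())


def extract_keywords(text: str, top_n: int = 3) -> List[str]:
    """Most frequent non-stopword tokens, a crude stand-in for buzz words."""
    counts = Counter(t for t in tokenize(text) if t not in STOPWORDS and len(t) > 2)
    return [word for word, _ in counts.most_common(top_n)]


def classify_sentiment(text: str) -> str:
    """Map a word-list score onto the Happy / Sad / Neutral labels used here."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE_WORDS for t in tokens) - sum(t in NEGATIVE_WORDS for t in tokens)
    if score > 0:
        return "Happy"
    if score < 0:
        return "Sad"
    return "Neutral"


def analyze(text: str) -> Dict[str, object]:
    return {"keywords": extract_keywords(text), "sentiment": classify_sentiment(text)}


print(analyze("United win the final. United celebrate a record win."))
```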

Once we have extracted the keywords and sentiment from an article, we use the following data model to pass them, along with the article’s recognized entities, to Pega Decisioning (adaptive and predictive analytics).
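A minimal sketch of such a record in Python, assuming hypothetical field and predictor names (`NewsFeatures`, `Keyword1` to `Keyword3`), could look like this. It also assumes each predictor holds a single value, so the variable-length keyword list is spread over fixed slots.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class NewsFeatures:
    """Hypothetical record handed from text analytics to decisioning."""

    article_id: str
    source: str
    sentiment: str  # "Happy", "Sad" or "Neutral"
    keywords: List[str] = field(default_factory=list)
    entities: Dict[str, List[str]] = field(default_factory=dict)  # e.g. {"Place": [...]}

    def to_predictors(self, max_keywords: int = 3) -> Dict[str, str]:
        # Assumption: one value per predictor, so keywords fill fixed slots.
        predictors = {"Source": self.source, "Sentiment": self.sentiment}
        for i in range(max_keywords):
            predictors[f"Keyword{i + 1}"] = self.keywords[i] if i < len(self.keywords) else ""
        for entity_type, names in self.entities.items():
            # Keep the first recognized entity of each type as its own predictor.
            predictors[entity_type] = names[0] if names else ""
        return predictors


features = NewsFeatures("A-1", "Example Sports", "Happy", ["united", "win"],
                        {"Organization": ["United"]})
print(features.to_predictors())
```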

Pega Decisioning

As you probably know, most machine learning approaches follow the same steps to give a system the intelligence to learn on its own and scale automatically. To build a proper machine learning capability, one must build a MODEL, then TRAIN the model, and then provide FEEDBACK to the model.

Adaptive Model

To define an adaptive model, we just need its predictors. As mentioned above, the extracted buzz words, sentiment, and article source are our first set of model predictors.

I call them the first set of predictors because we cannot know in advance whether they will help predict if the audience will like an article. Over time, once the model has been trained, we can review the performance of these predictors, as discussed later in this blog.

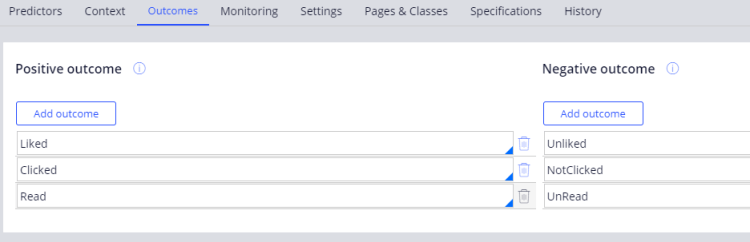
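To illustrate how categorical predictors like these can be turned into a propensity, here is a simplified naive Bayes scorer in Python. It is a textbook technique chosen for illustration, not Pega’s Adaptive Decision Manager implementation; the class name and the smoothing parameter `alpha` are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict


class NaiveBayesPropensity:
    """Toy naive Bayes scorer over categorical predictors (not Pega's ADM)."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha  # Laplace smoothing so unseen values never give 0
        self.outcomes = {True: 0, False: 0}  # positive / negative totals
        # counts[outcome][predictor][value] -> number of responses
        self.counts = {
            True: defaultdict(lambda: defaultdict(int)),
            False: defaultdict(lambda: defaultdict(int)),
        }
        self.values = defaultdict(set)  # distinct values seen per predictor

    def learn(self, predictors: Dict[str, str], positive: bool) -> None:
        self.outcomes[positive] += 1
        for name, value in predictors.items():
            self.counts[positive][name][value] += 1
            self.values[name].add(value)

    def propensity(self, predictors: Dict[str, str]) -> float:
        # Start from the prior log-odds of a positive response...
        log_odds = math.log((self.outcomes[True] + self.alpha) /
                            (self.outcomes[False] + self.alpha))
        # ...and add each predictor's evidence, assuming independence.
        for name, value in predictors.items():
            k = len(self.values[name]) + 1  # one extra slot for unseen values
            p_pos = (self.counts[True][name][value] + self.alpha) / (self.outcomes[True] + self.alpha * k)
            p_neg = (self.counts[False][name][value] + self.alpha) / (self.outcomes[False] + self.alpha * k)
            log_odds += math.log(p_pos / p_neg)
        return 1.0 / (1.0 + math.exp(-log_odds))


model = NaiveBayesPropensity()
for _ in range(3):
    model.learn({"Topic": "sports", "Sentiment": "Happy"}, positive=True)
    model.learn({"Topic": "politics", "Sentiment": "Sad"}, positive=False)
print(round(model.propensity({"Topic": "sports", "Sentiment": "Happy"}), 3))
```

Each predictor adds its own evidence to the log-odds, so a predictor whose values appear equally often among positive and negative responses adds close to nothing.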

Once the predictors are defined, the next step is to define which responses count as positive and which count as negative outcomes, as shown below.
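The positive/negative split can be expressed as a small lookup. This is a sketch only: the outcome names below (Clicked, Read, Ignored, Dismissed) are hypothetical examples, not the values configured in the screenshot.

```python
from typing import Optional

# Hypothetical outcome labels; use whatever outcomes your channel records.
POSITIVE_OUTCOMES = {"Clicked", "Read"}
NEGATIVE_OUTCOMES = {"Ignored", "Dismissed"}


def is_positive(outcome: str) -> Optional[bool]:
    """True for positive, False for negative, None if the model should skip it."""
    if outcome in POSITIVE_OUTCOMES:
        return True
    if outcome in NEGATIVE_OUTCOMES:
        return False
    return None  # e.g. a plain "Impression" teaches the model nothing here


responses = ["Clicked", "Impression", "Ignored", "Read"]
print([is_positive(r) for r in responses])
```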

Strategy and Propositions

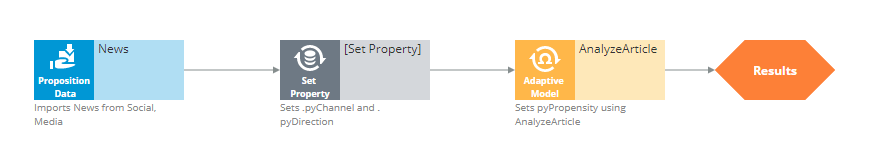

We need to define a proposition to which the adaptive model above can be applied, so that each news item fetched via the News API gets an appropriate propensity (its chance of being read). So, we defined the following proposition and a strategy to process each news item.

Next, define a strategy like the one below that calculates the propensity of each news item to display. This strategy is executed whenever an end user logs in to the breaking news portal.

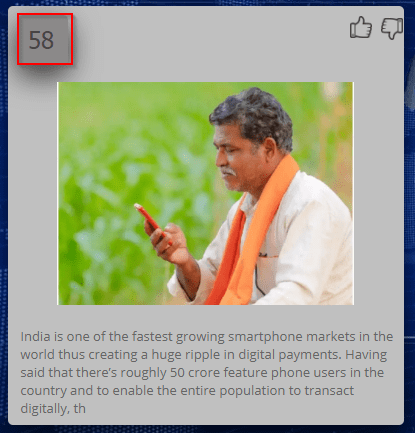

The outcome of the strategy above is shown in the news feed tile below (highlighted in the red box).

Figure 1: Chance of reading this news is 58%
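Pega strategies are built on a visual canvas, but a typical score, filter, and prioritize flow like the one described here can be sketched in Python. The `Proposition` fields are loosely modeled on Pega’s issue/group proposition hierarchy; the issue and group names, the `min_propensity` filter, and the scores are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Proposition:
    """Hypothetical proposition: one news item, grouped by category."""

    issue: str
    group: str
    name: str


def next_best_news(
    propositions: List[Proposition],
    score: Callable[[Proposition], float],
    min_propensity: float = 0.1,
    top_n: int = 3,
) -> List[Tuple[Proposition, float]]:
    # 1. Score every proposition with the (adaptive) model.
    scored = [(p, score(p)) for p in propositions]
    # 2. Drop items that are very unlikely to be read.
    eligible = [(p, s) for p, s in scored if s >= min_propensity]
    # 3. Rank by propensity and keep the top N for the portal.
    eligible.sort(key=lambda pair: pair[1], reverse=True)
    return eligible[:top_n]


items = [
    Proposition("News", "Sports", "United win the final"),
    Proposition("News", "Politics", "Budget vote delayed"),
    Proposition("News", "Weather", "Storm warning issued"),
]
# Stand-in scores per group; in practice these come from the model.
propensity_by_group = {"Sports": 0.62, "Politics": 0.05, "Weather": 0.31}
for prop, p in next_best_news(items, lambda prop: propensity_by_group[prop.group]):
    print(f"{p:.0%}  {prop.name}")
```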

Train and Monitor

As mentioned above, once we have built the model, we train it with a variety of data so that, based on model performance, we can settle on the most accurate predictors. Let’s look at this in detail!

Define a data flow that fetches a variety of news items with different buzz words and sentiments (Sad, Happy and Neutral). The strategy results should be written to the model analytics and to interaction history.

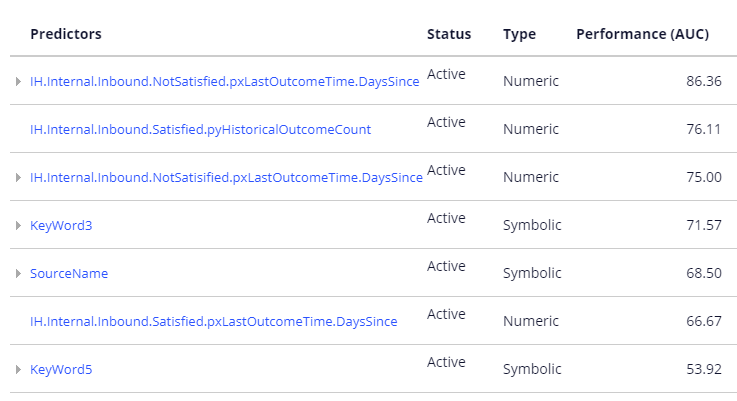

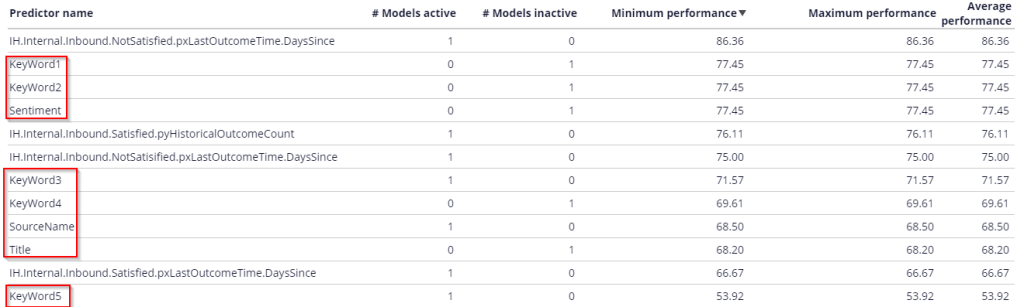
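The data flow’s job can be illustrated as three chained stages: a source, a processing step, and a destination. This Python sketch uses generators for the stages; the training items, the toy analyzer, and the in-memory `history` and `stats` containers are hypothetical stand-ins for the news source, the Text Analyzer, interaction history, and the model’s statistics.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

# Hypothetical labelled training items: (article text, was it read?)
TrainingItem = Tuple[str, bool]


def read_source(items: Iterable[TrainingItem]) -> Iterator[TrainingItem]:
    """Source stage: stream training items one by one."""
    yield from items


def enrich(items: Iterable[TrainingItem],
           analyze: Callable[[str], Dict[str, str]]) -> Iterator[Dict[str, object]]:
    """Processing stage: turn raw text into predictors plus the outcome."""
    for text, was_read in items:
        yield {**analyze(text), "Outcome": "Read" if was_read else "Ignored"}


def write(records: Iterable[Dict[str, object]], history: List[Dict[str, object]],
          stats: Dict[Tuple[str, str], List[int]]) -> None:
    """Destination stage: append to history and update per-value counts."""
    for record in records:
        history.append(record)
        positive = record["Outcome"] == "Read"
        for name, value in record.items():
            if name == "Outcome":
                continue
            counts = stats.setdefault((name, str(value)), [0, 0])  # [positives, total]
            counts[0] += int(positive)
            counts[1] += 1


def toy_analyze(text: str) -> Dict[str, str]:
    # Stand-in for the text analyzer: sentiment from a single keyword.
    return {"Sentiment": "Sad" if "injured" in text.lower() else "Happy"}


history: List[Dict[str, object]] = []
stats: Dict[Tuple[str, str], List[int]] = {}
training = [("United win the final", True), ("Striker injured", False),
            ("Fans celebrate title", True), ("Keeper injured again", False)]
write(enrich(read_source(training), toy_analyze), history, stats)
print(stats)
```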

Once the model has been trained with a good amount of data, open the adaptive model and review its model statistics to see how the predictors are performing, as shown below.

As you can see, some predictors are active and some are not. As a data analyst or architect, you need to make sure the right predictors are used, based on their average performance.
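One common way to judge a single predictor is the AUC of the scores it produces on its own: 0.5 means no better than chance and 1.0 means perfect separation. The Python sketch below computes that per predictor and flags it as active or inactive. The 0.52 threshold and the sample rows are assumptions for illustration, not Pega’s configuration or results.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def auc(scores: Sequence[float], labels: Sequence[bool]) -> float:
    """Probability that a random positive outscores a random negative."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        return 0.5  # undefined; treat as no better than chance
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def predictor_auc(rows: List[Dict[str, object]], predictor: str) -> float:
    """Score each row by its value's positive rate, then measure AUC."""
    tally = defaultdict(lambda: [0, 0])  # value -> [positives, total]
    for row in rows:
        tally[row[predictor]][0] += int(row["clicked"])
        tally[row[predictor]][1] += 1
    scores = [tally[row[predictor]][0] / tally[row[predictor]][1] for row in rows]
    return auc(scores, [bool(row["clicked"]) for row in rows])


rows = [
    {"Sentiment": "Happy", "Source": "A", "clicked": True},
    {"Sentiment": "Happy", "Source": "B", "clicked": True},
    {"Sentiment": "Sad", "Source": "A", "clicked": False},
    {"Sentiment": "Sad", "Source": "B", "clicked": False},
]
THRESHOLD = 0.52  # hypothetical cut-off for "active" predictors
for name in ("Sentiment", "Source"):
    score = predictor_auc(rows, name)
    print(name, round(score, 2), "active" if score >= THRESHOLD else "inactive")
```

Because the sketch scores the same rows it counts, it overstates performance on small samples; a real evaluation would use held-out or time-separated data.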

Capture Live Responses as Feedback

Once the model’s performance is satisfactory, it can be deployed to the downstream system to start capturing real data.

In our example, when an end user reads a news tile that matches their interests, the system captures it as a positive response and feeds that response back into the model, so that more relevant news is surfaced from the feed.

In the image below, you can see the history of different readers’ responses to a variety of news items.
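Capturing a live response amounts to appending a record to interaction history and letting the learning side read from it. Here is a minimal Python sketch under that assumption; the `Interaction` fields, the outcome labels, and the `FeedbackLoop` class are hypothetical, and a real deployment would write to Pega’s interaction history rather than to an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class Interaction:
    """One captured response, roughly what an interaction history row holds."""

    customer_id: str
    article_id: str
    group: str
    outcome: str  # hypothetical labels: "Clicked" or "Ignored"
    captured_at: datetime


@dataclass
class FeedbackLoop:
    history: List[Interaction] = field(default_factory=list)

    def capture(self, customer_id: str, article_id: str, group: str, clicked: bool) -> Interaction:
        # Record the live response with a UTC timestamp.
        record = Interaction(customer_id, article_id, group,
                             "Clicked" if clicked else "Ignored",
                             datetime.now(timezone.utc))
        self.history.append(record)
        return record

    def click_rate(self, group: str, customer_id: Optional[str] = None) -> Optional[float]:
        # Share of positive responses for a group, optionally for one reader.
        rows = [r for r in self.history if r.group == group
                and (customer_id is None or r.customer_id == customer_id)]
        if not rows:
            return None
        return sum(r.outcome == "Clicked" for r in rows) / len(rows)


loop = FeedbackLoop()
loop.capture("reader-1", "A-1", "Sports", clicked=True)
loop.capture("reader-2", "A-1", "Sports", clicked=False)
loop.capture("reader-1", "A-7", "Weather", clicked=True)
print(loop.click_rate("Sports"), loop.click_rate("Sports", "reader-1"))
```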

Conclusion

So, we have seen how Pega’s AI can help solutions in different industries offer their end customers insight-rich experiences built on current technology.

What Next?

It’s good that the solution surfaces quality news based on real-time user responses, but there are also plenty of ‘Not Satisfied/Unread/Not Clicked’ news items in the data warehouse, right? I will use this as an opportunity to bring in advanced Pega Marketing concepts to help our news audience catch up on what they missed!
